Are the Polls Giving Dems False Hope Again?

Pollsters and partisans are once again wondering if the polling has a pro-Dem bias

I am addicted to polls. I can’t get enough of them. I lived by them when I worked on campaigns. And now that I am more a political observer than an operative (ouch!), I still consume them voraciously. I read the crosstabs. I have Twitter alerts set up for a dog/bot that tweets out every poll (that’s a real thing). I know the polls are terrible right now, but I can’t help myself. Polling is an imperfect measurement, but it’s the only way to get a sense of the political environment: how voters feel about the candidates, which issues are popping, and what’s making people happy and mad.

The polling addicts got a rude awakening last week.

Nate Cohn, as he often does, rained on the Democrats’ recent parade of optimism with a column that sent a lot of progressives into a rage (and others into the fetal position):

> Early in the 2020 cycle, we noticed that Joe Biden seemed to be outperforming Mrs. Clinton in the same places where the polls overestimated her four years earlier. That pattern didn’t necessarily mean the polls would be wrong — it could have just reflected Mr. Biden’s promised strength among white working-class voters, for instance — but it was a warning sign.
>
> That warning sign is flashing again: Democratic Senate candidates are outrunning expectations in the same places where the polls overestimated Mr. Biden in 2020 and Mrs. Clinton in 2016.

It’s been six years since the great polling miss of 2016. The 2020 miss probably gets less attention than it deserves because, as the polls predicted, Biden won. But the margins were way off in a lot of states. The industry seems no closer to solving the problem now than it was in the aftermath of Trump’s win. It’s not for lack of trying. I can only speak for the Democrats, but our polling community is filled with highly motivated, very smart individuals with massive incentives to get this right. If the problem still isn’t fixed, that raises the possibility that it is unfixable.

Polling is the lifeblood of politics. It drives press coverage and campaign decision-making. But what if polling is fundamentally broken? What if we are viewing politics through a fun house mirror?

The Problem

Polls were never perfect. That’s what the whole ‘margin of error’ thing is all about. It is normal for polling to be off one way or another within a relatively narrow band. But a normal polling error should go either way. Sometimes the polls are too pro-Democrat; other times, they are too pro-Republican. That is not what’s been happening. When the polls are wrong, they are almost always wrong in the same direction. As the American Association for Public Opinion Research (AAPOR) put it in its post-mortem of the 2020 polling:

> The 2020 polls featured polling error of an unusual magnitude: It was the highest in 40 years for the national popular vote and the highest in at least 20 years for state-level estimates of the vote in presidential, senatorial, and gubernatorial contests. Among polls conducted in the final two weeks, the average error on the margin in either direction was 4.5 points for national popular vote polls and 5.1 points for state-level presidential polls.
>
> The polling error was much more likely to favor Biden over Trump. Among polls conducted in the last two weeks before the election, the average signed error on the vote margin was too favorable for Biden by 3.9 percentage points in the national polls and by 4.3 percentage points in statewide presidential polls.

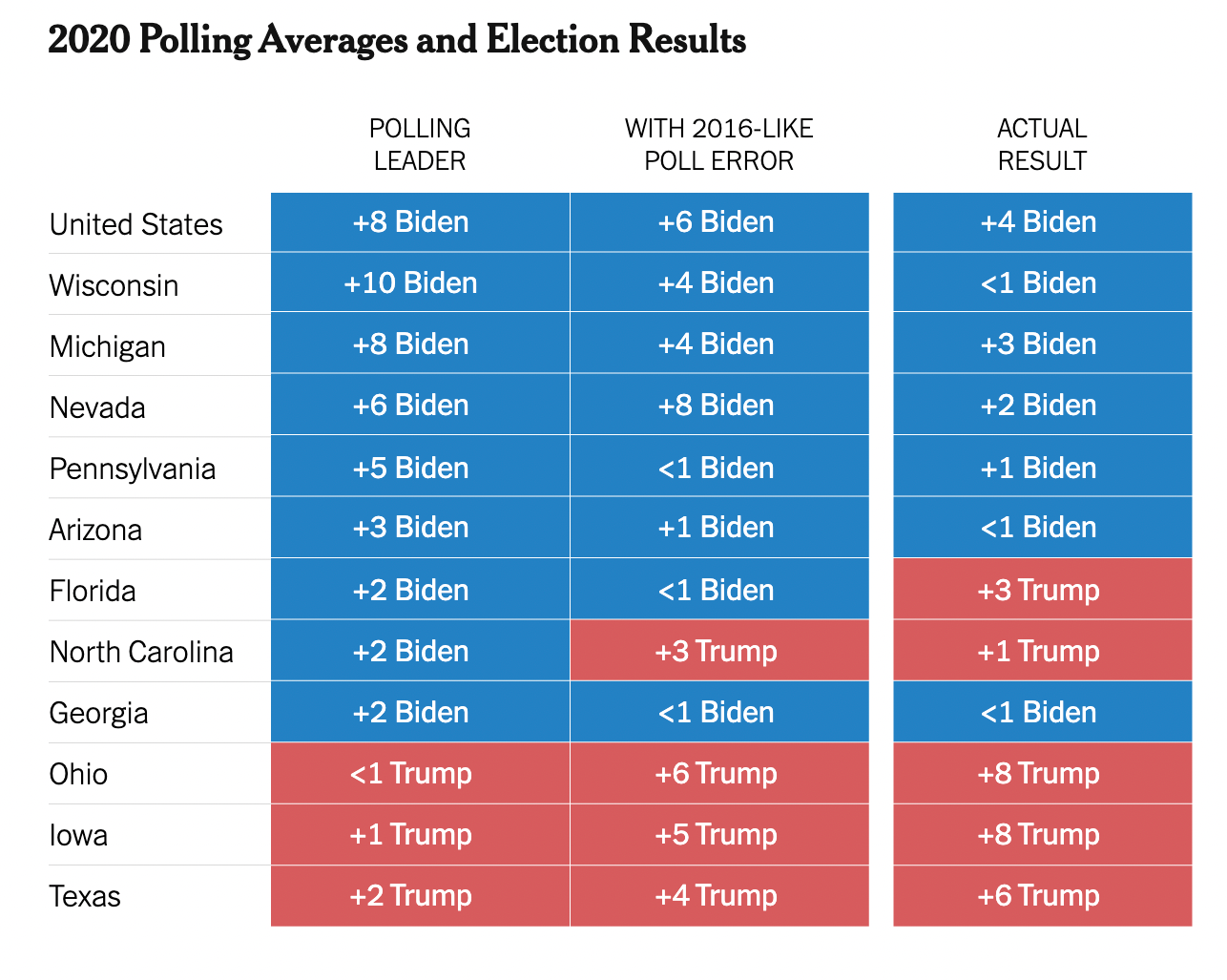

This chart from the New York Times shows just how bad things were in 2020. There was a nearly identical dynamic in 2016, and this is exactly what Nate Cohn and others worry may be happening in 2022.

In other words, the polls are repeatedly underestimating Trump voters, specifically non-college-educated white voters who distrust institutions. The states where the error was highest (Wisconsin, Ohio) are also states with a particularly high share of those voters. The error was less severe in states where a higher percentage of the electorate is college-educated (Georgia, North Carolina). Pollsters can’t figure out how to get certain segments of Trump voters to respond to their surveys, and the absence of this quiet group produces inaccurate results and a misperception about who will vote.

Nonresponse from Trump voters is part of the broader problem of getting people to answer the phone in an era when everyone under the age of 65 views talking on the phone as nearly as painful as a root canal without anesthesia. When was the last time you answered a call from an unknown number? The iPhone marks almost all polling calls as “potential spam.” A few years ago, someone on the Obama data team told me that the response rate for our polls dropped by half from 2008 to 2012 and then by half again in 2016. It’s safe to say that Trump voters aren’t the ones answering calls from unknown numbers and then spending a considerable amount of time on the phone with strangers. As pollster David Hill wrote in the Washington Post about conducting tracking polls in Florida in 2020:

> To complete 1,510 interviews over several weeks, we had to call 136,688 voters. In hard-to-interview Florida, only 1 in 90-odd voters would speak with our interviewers. Most calls to voters went unanswered or rolled over to answering machines or voicemail, never to be interviewed despite multiple attempts.

I explored the polling problem in a post after the 2020 election. At the time, there was a consensus within the polling industry that major changes in methodology were in order. A coalition of Democratic pollsters and AAPOR both undertook serious efforts to figure out what went wrong and prescribe changes going forward. Both efforts ended with a shrug. While there are some notable exceptions, the vast majority of polling is still conducted with the same methods that produced the large errors in 2016 and 2020.

The problem boils down to this: polls are supposed to tell us not just how people are going to vote but also who is going to vote. When highly engaged, educated people make up a disproportionate share of the polling universe, you get a distorted result.

Media v. Campaign Polls

Other than getting Democratic hopes up, it’s fair to wonder why the accuracy of polling even matters. To answer that question, it’s important to distinguish between media polls and campaign polls. Most of what we consume are polls conducted by or for media outlets. While many of those polls are conducted by talented pollsters with the utmost statistical rigor, their only real purpose is entertainment. They are something for reporters to write and tweet about, fodder to fill the endless hours of cable news. These polls are statistical junk food: enjoyable, but of little practical value.

Political junkies (like all of us) find the polling addictive. We also consume the polling because we want to know what is going to happen, largely so we can modulate our expectations. The online rage at the pollsters in 2016 and 2020 was not born of a passion for mathematical accuracy. We were mad because the polls gave us false hope.

Campaign polls, on the other hand, serve a very specific purpose. Popular culture and cynical pundits assume that politicians use polls to decide what to believe. And there are definitely some politicians who do that. Bill Clinton (in)famously polled where to go on vacation during his presidency. But for the vast majority of political campaigns, polls serve two purposes. First, they inform us about the political environment: what people care about (issues) and how they feel (satisfied, hopeful, pissed off, etc.). Second, they tell us where and when to invest resources.

That second purpose is particularly important for the national party committees and Super PACs. In the coming weeks, these organizations must decide how much to invest in the Ohio Senate race. If the polls are correct and Tim Ryan is in a competitive race in a state Trump won by eight points, it’s a wise investment that could pay off in an expanded Senate majority that passes voting rights, codifies Roe, and raises the minimum wage. If the polls in Ohio are wrong, millions of dollars that could have been spent elsewhere will have been wasted. In 2020, the Biden campaign visited and invested in Ohio and Iowa down the stretch because the polling said those states were within reach. The polls were wrong: Trump won both states easily. Had Biden lost Nevada and Wisconsin by a few votes, the Ohio and Iowa efforts would have been an error of catastrophic proportions.

This is ultimately why the accuracy of polling matters so much.

The Counter-Case

Does all of this mean Democrats are doomed? Is the recent burst of Democratic optimism another case of inaccurate poll-fueled naivete?

No.

While polls may or may not be wrong in certain places, there is plenty of other evidence of a Democratic resurgence and an improved political environment. First, you can ignore the polls and look at the results of special elections, where Democrats have consistently outpaced their 2020 performance. Second, while inflation is still high, gas prices have been dropping steadily for months. Finally, and most importantly, sometimes it makes sense to ditch the calculator and use your common sense: it’s obvious that the Dobbs decision energized millions and millions of voters.

It’s also worth noting that the polls were much more accurate in 2018 — including in Ohio and Wisconsin.

Trump was on the ballot in 2016 and 2020 but not in 2018, so there may be something about the voters who turn out for Trump that the polls miss. Without Trump on the ballot, those polling inaccuracies may be absent in this election. Nate Silver makes a compelling argument against the idea that polls are inherently pro-Democratic:

> People’s concerns about the polls stem mostly from a sample of exactly two elections, 2020 and 2016. You can point out that polls also had a Democratic bias in 2014. But, of course, they had a Republican bias in 2012, were largely unbiased in 2018, and have either tended to be unbiased or had a Republican bias in recent special elections.
>
> True, in 2020 and 2016, polls were off the mark in a large number of races and states. But the whole notion of a systematic polling error is that it’s, well, systematic: It affects nearly all races, or at least the large majority of them. There just isn’t a meaningful sample size to work with here, or anything close to it.

The truth is that no one knows, but the results of this upcoming election will tell us a lot about whether the last two presidential elections were polling flukes or polling is now beyond repair. If it’s the former, we can go back to a healthy skepticism of polls. If it’s the latter, the art and science of politics is in for a massive overhaul.

My main piece of advice as you digest the takes from the Nates is to ignore the polls. The work we have to do between now and Election Day is the same whether the polls are right or wrong. We have to run, organize, and vote like we are behind. That approach is easier said than done, but it is undoubtedly more productive.