Processing the Persistent Polling Error

What if the polls have been wrong the whole time?

In the waning days of the 2012 election, Wall Street Journal columnist Peggy Noonan wrote a universally derided column predicting that Mitt Romney would beat Barack Obama. To buttress her prediction, Noonan offered no data, demographic trends, or voting patterns. Her column was based entirely on absurd, out-of-touch anecdotes such as:

There’s the thing about the yard signs. In Florida a few weeks ago I saw Romney signs, not Obama ones. From Ohio I hear the same. From tony Northwest Washington, D.C., I hear the same.

There are few things more triggering to a campaign operative than people assigning outsize significance to yard signs. Of course, Obama won Ohio, Florida, and “tony Northwest Washington, D.C.” despite the yard sign deficit. The 2012 election was a victory for data journalists like FiveThirtyEight’s Nate Silver, who had repeatedly argued that political reporting was more science than art and that reporters too often valued anecdotes and observations over data and fundamentals. The importance of data was an article of faith in Obama-world. The campaign’s model nailed the 2012 election, and we used that fact as a cudgel against reporters relying on anachronistic tools like yard signs and interviews with random people in diners.

This undying faith in the data is why so many people (myself included) got 2016 wrong. The national polls were largely correct, but the state polls were very, very off. They under-sampled the white non-college voters who made up Trump’s base and therefore missed a major change in the political firmament.

The polling error, while unexpected, was somewhat understandable. The 2016 election was unusual: Trump was a very different kind of candidate, both Trump and Clinton ended the race with historically high unfavorable ratings, there was an unusually large number of undecided voters, and third-party candidates were showing rare strength.

The 2020 election was supposed to be different. Everyone recognized the problems: polls were under-sampling Trump supporters, and there were not enough high-quality battleground polls. A lot of time, effort, and money went into fixing those problems. Polls were weighted by education to ensure they included the appropriate number of non-college-educated voters. The New York Times, NBC, and others conducted dozens of polls of the battleground states that would decide the election.

None of it mattered. The polls were wrong again: they underestimated Trump’s support both nationally and in the battleground states. They were almost universally wrong, and wrong in the same direction. To be fair, polling is not an exact science. People tend to ignore the fact that most polls have a relatively large “margin of error.” Expecting polls to perfectly predict outcomes is a fool’s errand, but the errors should be random, scattering in both directions. When the polls are consistently wrong in one direction, they create a lasting misperception about the political landscape. This persistent polling error has big implications for how we understand the 2020 election and politics going forward.
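To make the difference between random and systematic error concrete, here is a minimal Python sketch. Every number in it is a hypothetical assumption, not 2020 data: a candidate's true support of 48 percent, 50 polls of 800 respondents each, and a 3-point bias shared by every poll in the second batch. The point is only that random sampling error largely cancels out when polls are averaged, while an error shared by every poll does not.

```python
import math
import random


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Approximate 95% margin of error for a proportion p from a simple random sample of size n."""
    return z * math.sqrt(p * (1 - p) / n)


def run_poll(rng: random.Random, support: float, n: int) -> float:
    """Simulate one poll: each of n respondents backs the candidate with probability `support`."""
    return sum(rng.random() < support for _ in range(n)) / n


def simulate(true_support: float, bias: float, polls: int, n: int, seed: int = 2020) -> list[float]:
    """Run `polls` polls whose respondents behave as if support were true_support + bias.

    bias = 0 models purely random sampling error; a nonzero bias models an error
    shared by every poll (for example, one candidate's supporters answering less often).
    """
    rng = random.Random(seed)
    return [run_poll(rng, true_support + bias, n) for _ in range(polls)]


TRUE_SUPPORT = 0.48  # hypothetical true vote share
N = 800              # hypothetical respondents per poll
POLLS = 50           # hypothetical number of polls

random_error = simulate(TRUE_SUPPORT, bias=0.0, polls=POLLS, n=N)
shared_error = simulate(TRUE_SUPPORT, bias=-0.03, polls=POLLS, n=N)

avg_random = sum(random_error) / POLLS
avg_shared = sum(shared_error) / POLLS
under_random = sum(est < TRUE_SUPPORT for est in random_error)
under_shared = sum(est < TRUE_SUPPORT for est in shared_error)

print(f"Margin of error per poll: +/-{margin_of_error(TRUE_SUPPORT, N):.1%}")
print(f"Random error only: average {avg_random:.1%}, {under_random}/{POLLS} polls too low")
print(f"Shared 3-pt bias:  average {avg_shared:.1%}, {under_shared}/{POLLS} polls too low")
```

Under these assumptions each poll's margin of error is about 3.5 points. The unbiased polls land on both sides of 48 percent and their average sits close to it; the biased polls come in too low nearly every time, and adding more of them would not fix the average.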

What Went Wrong?

Polling has been in trouble for a long time. There are many reasons for this, but the short, somewhat overly simplistic version is that it is getting harder and harder to get people to answer the phone from an unknown number. Response rates (the percentage of people who respond to polls) have been falling precipitously for years.

Someone involved in the Obama campaign’s research and data operations told me a few years ago that the response rate for our polls dropped 50 percent from 2008 to 2012 and then another 50 percent by 2016, leaving it at roughly a quarter of its 2008 level. In theory, falling response rates should make polling less accurate in a random way, with some polls too Republican and others too rosy for Democrats. But once again, that is not what is happening. The polls are consistently wrong in one direction.

While it is too early to know for sure what happened, there are two very interesting theories making the rounds among pollsters. Nate Cohn of the New York Times walks through these theories and more in a very thoughtful piece that I highly recommend.

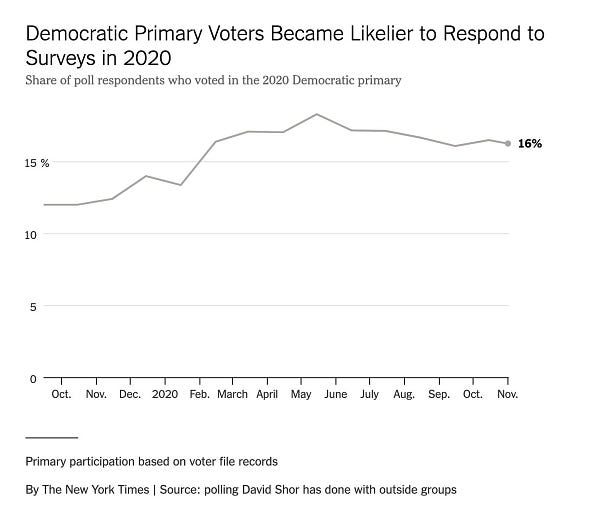

The first theory is related to the pandemic. As Democratic pollster David Shor told Cohn:

The basic story is that after lockdown, Democrats just started taking surveys, because they were locked at home and didn’t have anything else to do. Nearly all of the national polling error can be explained by the post-Covid jump in response rates among Dems.

If this theory is correct, it bodes well for future polling, since the pandemic is a temporary condition that (hopefully) won’t be around next election. But if it is correct, it also requires us to reassess much of what we believe about how the public views the pandemic. If the polls were oversampling the people who took the pandemic most seriously, wore masks most often, and were most fearful of opening up too soon, then we had a very incorrect view of the politics of the issue and dramatically underestimated the political efficacy of Trump’s COVID messaging. Most political analysts viewed the election through the following prism: polls say COVID is the most important issue, polls say most voters disapprove of how Trump is handling it, ergo he is going to lose the election handily and take his party down with him.

The second theory takes a number of forms but boils down to the idea that the people least likely to respond to polls are also the people most likely to support Trump. One version, advocated by David Shor for a number of years, holds that people with low trust in institutions are less likely to respond to pollsters and more likely to be Trump voters. Another related version focuses on the QAnon conspiracy theory. A study out of the University of Southern California found that the states where polls underestimated Trump’s support had a higher prevalence of QAnon believers. Emilio Ferrara, who oversaw the study, told the New York Times:

The higher the support for QAnon in each state, the more the polls underestimated the support for Trump.

These are all just theories at this point, and the answer is likely some combination of all of the above. But if there is a correlation between social trust and poll response rates, then this is a problem that will last long after the pandemic has receded. And if that is the case, it could be a very long time before we can truly trust the polls again.
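Weighting by education, the fix pollsters adopted after 2016, only corrects imbalances on the variables a pollster weights on. The Python sketch below uses entirely hypothetical group shares, support levels, and response rates to show why the second theory would survive that fix: if Trump supporters respond slightly less often than everyone else within each education group, weighting by education narrows the gap but leaves a sizable error. It computes expected values directly rather than simulating random samples, so it isolates the bias from ordinary sampling noise.

```python
from dataclasses import dataclass


@dataclass
class Group:
    name: str
    population_share: float  # share of the electorate (hypothetical)
    trump_support: float     # true Trump share within the group (hypothetical)
    trump_response: float    # response rate among Trump supporters (hypothetical)
    other_response: float    # response rate among everyone else (hypothetical)

    def respondents(self) -> tuple[float, float]:
        """Expected Trump and non-Trump respondents per unit of electorate."""
        trump = self.population_share * self.trump_support * self.trump_response
        other = self.population_share * (1 - self.trump_support) * self.other_response
        return trump, other


# Hypothetical electorate: in both groups, Trump supporters answer at 5/6 the rate of others,
# and non-college voters answer less often overall.
groups = [
    Group("college", 0.35, 0.40, 0.050, 0.060),
    Group("non-college", 0.65, 0.55, 0.030, 0.036),
]

true_share = sum(g.population_share * g.trump_support for g in groups)

# Unweighted estimate: Trump's share among everyone who happened to respond.
trump_total = sum(g.respondents()[0] for g in groups)
all_total = sum(sum(g.respondents()) for g in groups)
unweighted = trump_total / all_total

# Education-weighted estimate: each group's respondent mix, scaled back to its true population share.
weighted = 0.0
for g in groups:
    trump, other = g.respondents()
    weighted += g.population_share * trump / (trump + other)

print(f"True Trump share:   {true_share:.1%}")
print(f"Unweighted poll:    {unweighted:.1%}")
print(f"Education-weighted: {weighted:.1%}")
```

Under these assumptions the raw poll shows Trump at roughly 43 percent and the education-weighted version at roughly 45 percent, against a true share just under 50 percent. Weighting helps because non-college voters are underrepresented among respondents, but it cannot see the gap between Trump supporters and everyone else inside each group.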

Was Everything Off?

Let’s presume the problem with the polling is bigger than the pandemic, and that the polling error that got 2016 and 2020 wrong has been there the whole time. If that is the case, the polls have been giving us a distorted view of the political landscape for the last four years, and we have to re-evaluate all of our prior views about politics in the Trump era.

Under this scenario:

Trump has been more popular than we thought throughout his Presidency.

Divisive wedge issues like “law and order,” Antifa, and “looting in Democrat cities” that were seen as the province of Fox News fever dreams may have resonated with more people than we believed.

The public appetite for impeachment was weaker than Democrats believed, both when the process began and when it ended.

Democratic policy initiatives were less popular and Republican policies more popular than we thought.

The public was more supportive of Republicans jamming Amy Coney Barrett onto the court than was assumed at the time.

I don’t want to overstate the case; the polls were off by small margins. But in a highly polarized political environment, small shifts can be critical. Trump’s approval has hovered around 44 percent for most of his presidency. That number is historically terrible and consistent with someone destined to lose reelection handily. But what if Trump’s approval rating was closer to 47 or 48 percent this entire time?

Some have pushed back on this notion by pointing out that the polls were more accurate in 2018. While the national polling in that election was pretty good, there were a number of down-ballot races where the polls once again underestimated the strength of Republican candidates. The pre-election polls showed Bill Nelson and Andrew Gillum in strong positions in Florida, only for both of them to lose unexpectedly. It was a painful result that repeated itself in Florida in 2020.

Perhaps Democrats would have done nothing differently. Impeachment was the right course of action regardless of the polling. But it is very disorienting to realize that we may have been looking through a funhouse mirror the entire time. Political decision making (and punditry) is only as good as the data on which it is based. And it’s pretty clear that a lot of the data was bad.

Media Polls Vs. Campaign Polls

Most of the conversation around polling errors in 2016 and 2020 focuses on the media polls that feed into the models at FiveThirtyEight, The Economist, and The Upshot. Political observers live and die by these polls. They set our moods and our expectations, but they are ultimately empty clickbait calories. It does not matter if Nate Cohn’s needle is correct or Nate Silver’s model is precise. The polling that really matters is the polling done by campaigns. That is the research that shapes the messages in ads, where money is spent, and where the candidates travel. Historically, campaign polling has been more precise, in part because its goal is to understand the electorate rather than to predict the outcome. In 2020, the campaign polling was very wrong, and that had huge implications for Democrats up and down the ballot. According to Roll Call, Representative Cheri Bustos, the chair of the DCCC, told her colleagues:

Our polls, Senate polls, [governors] polls, presidential polls, Republican polls, public polls, turnout modeling, and prognosticators all pointed to one political environment — that environment never materialized.

It seems very clear that Democrats and Republicans alike were shocked by the results. According to news reports before the election, Republicans with access to internal campaign polling believed they were certain to lose seats in the House and likely to lose control of the Senate, and that Trump was about to get trounced.

It’s worth noting that the Biden campaign’s polling seemed to be better than most. The campaign was adamant throughout the general election that the race was closer than the public polling suggested. On Election Day, Biden campaign manager Jen O’Malley Dillon gave a presentation to reporters in which she said they felt good about Michigan, Pennsylvania, Wisconsin, and Arizona; that North Carolina, Georgia, and Florida were toss-ups; and that Ohio and Texas were “stretches.” This assessment turned out to be exactly right. Even so, the last-minute campaign stops in Iowa and Ohio suggest their research also underestimated Trump’s support in some states. Biden did just about as poorly in those states as Clinton did four years prior.

In the end, Biden won and Trump lost, so it may be tempting to dismiss these polling problems. But polling is about more than predicting winners. It’s a tool for understanding the political environment. We now know we were flying pretty blind during this election and perhaps for much longer. And that has real consequences as we chart our course forward.
